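Preface

The Harris and Shi-Tomasi algorithms are rotation-invariant by construction: when the target image is rotated, they still find the same corners. They are not scale-invariant, however. Once the image has been scaled, the same detectors can fail to find those corners, as shown in the figure:

In 2004, D. Lowe of the University of British Columbia proposed a new algorithm, Scale Invariant Feature Transform (SIFT), in his paper Distinctive Image Features from Scale-Invariant Keypoints. It extracts keypoints and computes their descriptors. The OpenCV documentation notes that the paper is easy to understand and recommends reading it.

SIFT consists of four main steps; see the references at the end of this post for details.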

Pipeline

1. Scale-space Extrema Detection

2. Keypoint Localization

3. Orientation Assignment

4. Keypoint Descriptor
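To give a feel for the first step, here is a minimal sketch of a Difference of Gaussians (DoG) stack for a single octave, written with NumPy only. It is not OpenCV's implementation: the helper names (gaussian_kernel, blur, dog_stack) are made up for this illustration, the values sigma0 = 1.6 and k = sqrt(2) are assumed starting parameters, and the blur uses simple zero padding and assumes the image is larger than the kernel. A full SIFT detector would go on to search this stack for extrema across both position and scale.

```python
import numpy as np


def gaussian_kernel(sigma: float) -> np.ndarray:
    """1-D Gaussian kernel truncated at about 3 sigma, normalized to sum to 1."""
    radius = int(np.ceil(3 * sigma))
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-(x ** 2) / (2 * sigma ** 2))
    return kernel / kernel.sum()


def blur(image: np.ndarray, sigma: float) -> np.ndarray:
    """Separable Gaussian blur with zero padding at the borders."""
    kernel = gaussian_kernel(sigma)
    # Blur every row, then every column of the row-blurred result
    rows = np.apply_along_axis(np.convolve, 1, image, kernel, mode="same")
    return np.apply_along_axis(np.convolve, 0, rows, kernel, mode="same")


def dog_stack(image: np.ndarray, sigma0: float = 1.6,
              k: float = 2 ** 0.5, levels: int = 4) -> list:
    """Return `levels` DoG images D_i = L(k^(i+1) * sigma0) - L(k^i * sigma0)."""
    blurred = [blur(image, sigma0 * k ** i) for i in range(levels + 1)]
    return [b1 - b0 for b0, b1 in zip(blurred, blurred[1:])]


# A single bright pixel in the middle of a 64x64 image
image = np.zeros((64, 64))
image[32, 32] = 1.0

dogs = dog_stack(image)
center_responses = [float(d[32, 32]) for d in dogs]
print(center_responses)
```

For this bright spot, every DoG value at the centre is negative, because the stronger blur always flattens the peak more than the weaker one. On a constant image the DoG is zero away from the borders, which is why flat regions produce no keypoint candidates.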

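Usage

SIFT was a patented algorithm, so in OpenCV 3 it and similar algorithms were split out of the main library and placed in the xfeatures2d module of the opencv-contrib package. (The patent expired in March 2020, and OpenCV 4.4.0 and later also provide SIFT in the main module as cv2.SIFT_create().) opencv-contrib can be installed via pip:

pip install opencv-contrib-python

Example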

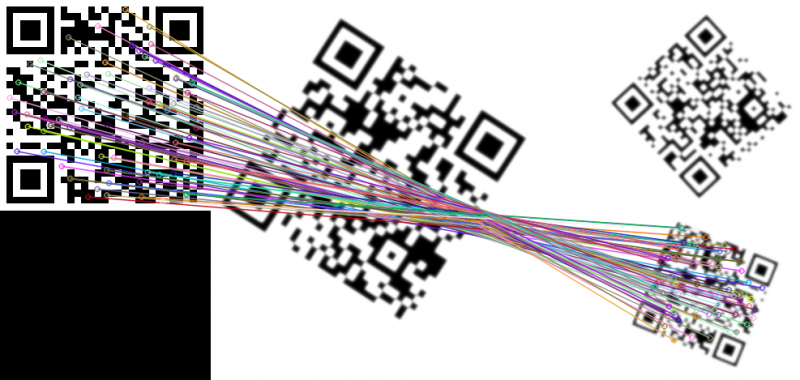

Find the pattern QR code among three QR codes:

#!/usr/bin/python3
import cv2


def sift_alignment(image_1: str, image_2: str):
    """
    Aligns two images by using the SIFT features.

    Step 1. The function first detects the SIFT features in I1 and I2.
    Step 2. Then it uses knnMatch(I1, I2) to find, for each descriptor in I1,
            its two nearest neighbours in I2.
    Step 3. The matched pairs returned by Step 2 are potential matches based
            on similarity of local appearance, many of which may be incorrect.
            Therefore, we do a ratio test to find the good matches.

    Reference: https://docs.opencv.org/3.4.3/dc/dc3/tutorial_py_matcher.html

    Parameters:
        image_1, image_2: filename as string
    Returns:
        (matched pairs number, good matched pairs number, match_image)
    """
    im1 = cv2.imread(image_1, cv2.IMREAD_GRAYSCALE)
    im2 = cv2.imread(image_2, cv2.IMREAD_GRAYSCALE)
    if im1 is None or im2 is None:
        # cv2.imread returns None instead of raising when a file cannot be read
        raise FileNotFoundError(f"cannot read {image_1!r} or {image_2!r}")

    # OpenCV >= 4.4 exposes SIFT in the main module; older builds need opencv-contrib
    if hasattr(cv2, "SIFT_create"):
        sift = cv2.SIFT_create()
    else:
        sift = cv2.xfeatures2d.SIFT_create()

    key_points_1, descriptors_1 = sift.detectAndCompute(im1, None)
    key_points_2, descriptors_2 = sift.detectAndCompute(im2, None)

    bf_matcher = cv2.BFMatcher()  # brute force matcher (L2 norm by default)
    # matches = bf_matcher.match(descriptors_1, descriptors_2)  # result is not good
    matches = bf_matcher.knnMatch(descriptors_1, descriptors_2, k=2)

    # Apply ratio test
    good_matches = []
    for pair in matches:
        if len(pair) < 2:  # knnMatch can return fewer than k neighbours
            continue
        m, n = pair
        if m.distance < 0.6 * n.distance:  # this parameter affects the result filtering
            good_matches.append([m])

    # flags=2: do not draw keypoints that have no match
    match_img = cv2.drawMatchesKnn(im1, key_points_1, im2, key_points_2,
                                   good_matches, None, flags=2)
    return len(matches), len(good_matches), match_img


matches, good_matches, match_img = sift_alignment('pattern.png', 'test.png')
cv2.imwrite('match.png', match_img)
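The ratio test is the part of this script that most affects the result, so it may help to see it in isolation. The sketch below reproduces the idea with plain NumPy on tiny made-up 2-D "descriptors" (real SIFT descriptors have 128 dimensions). The function name ratio_test_matches and the sample data are hypothetical, and the code assumes the second descriptor set has at least two rows.

```python
import numpy as np


def ratio_test_matches(desc_1: np.ndarray, desc_2: np.ndarray, ratio: float = 0.6):
    """Return (i, j) pairs where desc_2[j] is the nearest neighbour of desc_1[i]
    and is clearly closer than the second nearest neighbour."""
    # Pairwise Euclidean distances, shape (len(desc_1), len(desc_2))
    diff = desc_1[:, None, :] - desc_2[None, :, :]
    dist = np.sqrt((diff ** 2).sum(axis=2))

    # Indices of the neighbours of each row, sorted by increasing distance
    order = np.argsort(dist, axis=1)

    good = []
    for i, (best, second) in enumerate(order[:, :2]):
        # Keep the match only if the best distance beats the runner-up by the ratio
        if dist[i, best] < ratio * dist[i, second]:
            good.append((i, int(best)))
    return good


desc_2 = np.array([[0.0, 0.0], [10.0, 0.0], [0.0, 10.0]])
desc_1 = np.array([
    [0.5, 0.0],  # very close to desc_2[0], far from the others: kept
    [5.0, 0.0],  # equally far from desc_2[0] and desc_2[1]: rejected as ambiguous
])

print(ratio_test_matches(desc_1, desc_2))  # [(0, 0)]
```

A lower ratio keeps only very distinctive matches, while a higher one lets more ambiguous matches through. The 0.6 used in the script is stricter than the 0.75 used in the OpenCV matcher tutorial.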

Running the sift_alignment script above produces the following result:

References

1. OpenCV documentation, https://docs.opencv.org/3.4.3/da/df5/tutorial_py_sift_intro.html

2. OpenCV documentation, https://docs.opencv.org/3.4.3/dc/dc3/tutorial_py_matcher.html

3. Python OpenCV SIFT algorithm example, https://www.jb51.net/article/135366.htm
